Google Gemini AI

Gemini is Google's multimodal AI model family. It can reason across different media types and powers chatbots, search enhancements, productivity tools, and on-device interactions.

Before setting up

Before you can connect, make sure that:

- You have selected or created a Cloud Platform project.

- You have enabled billing for your project.

- You have enabled the Vertex AI API.

- You have created a service account and generated a JSON key for it.

Creating a service account and generating a JSON key

- Open the Cloud Platform project you selected or created.

- Go to the IAM & Admin section.

- On the left sidebar, select Service accounts.

- Click Create service account.

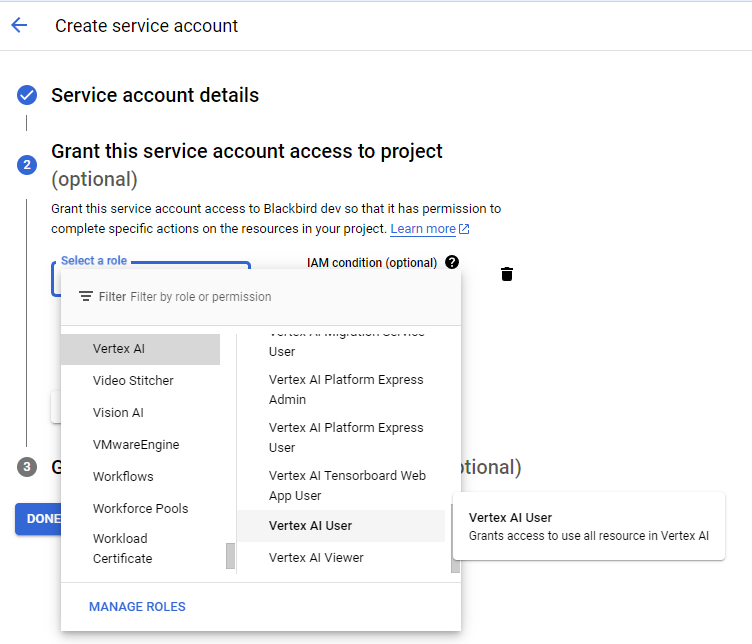

- Enter a service account name and, optionally, a description. Click Create and continue. Select the Vertex AI Administrator or Vertex AI User role for the service account and click Continue.

- Click Done.

- From the service accounts list, select the newly created service account and navigate to the Keys section.

- Click Add key => Create new key. Choose the JSON key type and click Create.

- Open the downloaded JSON file and copy its contents. You will paste them into the Service account configuration string connection parameter.
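Before pasting, it can help to confirm that you copied the whole file. The sketch below is a minimal check, assuming the standard Google Cloud service account key layout (fields `type`, `project_id`, `private_key`, `client_email`). The helper `check_service_account_json` is hypothetical and not part of Blackbird, which performs its own validation when you connect.

```python
import json

# Fields present in a standard Google Cloud service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_json(raw: str) -> list[str]:
    """Return problems found in the copied key; an empty list means it looks complete.

    Hypothetical helper for illustration only.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not data.get(name)]
    # Service account keys declare "type": "service_account".
    if data.get("type") not in (None, "", "service_account"):
        problems.append(f"unexpected type: {data['type']!r}")
    return problems


sample = json.dumps({
    "type": "service_account",
    "project_id": "my-project",
    "private_key": "-----BEGIN PRIVATE KEY-----\n<redacted>\n-----END PRIVATE KEY-----\n",
    "client_email": "blackbird@my-project.iam.gserviceaccount.com",
})
print(check_service_account_json(sample))  # []
```

A truncated copy (for example, one missing the closing brace) is reported as invalid JSON rather than silently accepted.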

Connecting

- Navigate to apps and search for Google Gemini AI. If you cannot find Google Gemini AI, click Add App in the top right corner, select Google Gemini AI, and add the app to your Blackbird environment.

- Click Add Connection.

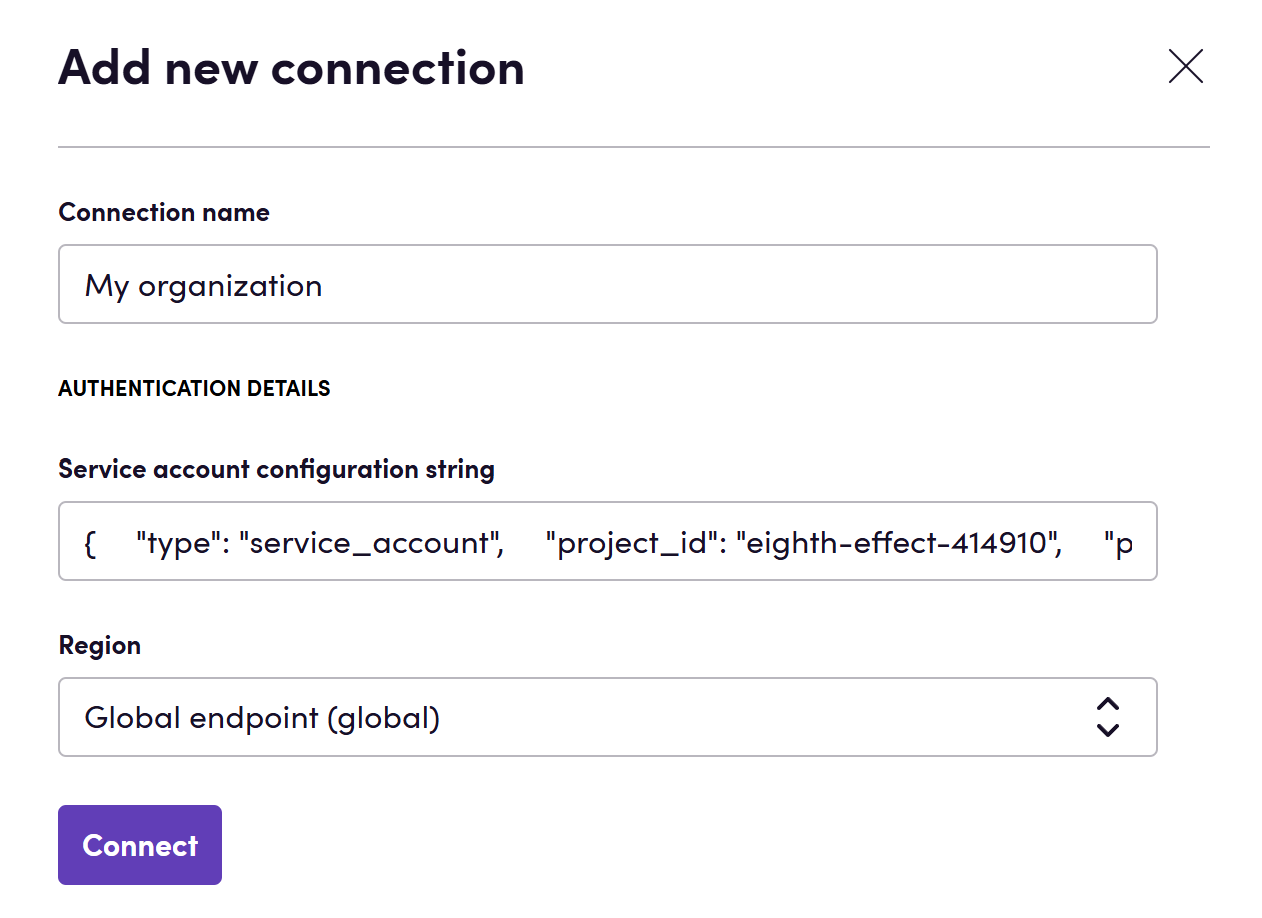

- Name your connection for future reference e.g. ‘My organization’.

- Paste the JSON key contents you copied earlier into the Service account configuration string field.

- Choose the region you want to use.

- Click Connect.

- Confirm that the connection has appeared and the status is Connected.

Actions

Generation

- Generate text generates text using a Gemini model. Optionally, you can specify an image or a video to generate text with the gemini-1.0-pro-vision model. Images and videos are each limited to 20 MB, and you can specify either an image or a video, but not both. Supported image formats are PNG and JPEG; supported video formats are MOV, MPEG, MP4, MPG, AVI, WMV, MPEGPS, and FLV. Optionally, set Is Blackbird prompt to True to indicate that the prompt given to the action is the output of one of the AI Utilities app’s actions. You can also specify safety categories in the Safety categories input parameter and their respective thresholds in the Thresholds for safety categories input parameter. If one list has more items than the other, the extra items are ignored.

- Generate text from files generates text using a Gemini model with one or more files attached to the conversation.
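The input rules for the Generate text action can be summarized in a short sketch. It is an illustration of the documented rules, not Blackbird's implementation. Assumptions: 20 MB is read as 20 × 1024 × 1024 bytes, file type is judged by extension, and categories and thresholds are paired by list position. The names `MediaFile`, `check_media`, and `pair_safety_settings` are hypothetical, and the example category and threshold values follow Vertex AI's harm category naming; the exact values the app accepts may differ.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

MAX_MEDIA_BYTES = 20 * 1024 * 1024  # 20 MB; assumption: binary megabytes
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg"}
VIDEO_EXTENSIONS = {".mov", ".mpeg", ".mp4", ".mpg", ".avi", ".wmv", ".mpegps", ".flv"}


@dataclass
class MediaFile:
    name: str
    size_bytes: int


def check_media(image: Optional[MediaFile], video: Optional[MediaFile]) -> None:
    """Raise ValueError if the inputs break the documented image/video rules."""
    if image is not None and video is not None:
        raise ValueError("specify either an image or a video, not both")
    for media, allowed, kind in ((image, IMAGE_EXTENSIONS, "image"),
                                 (video, VIDEO_EXTENSIONS, "video")):
        if media is None:
            continue
        # Judge the format by file extension (an assumption made for this sketch).
        if Path(media.name).suffix.lower() not in allowed:
            raise ValueError(f"unsupported {kind} format: {media.name}")
        if media.size_bytes > MAX_MEDIA_BYTES:
            raise ValueError(f"{kind} exceeds 20 MB: {media.name}")


def pair_safety_settings(categories: list[str], thresholds: list[str]) -> dict[str, str]:
    """Pair each category with the threshold at the same position.

    zip() stops at the shorter list, so extra items in the longer list are ignored.
    """
    return dict(zip(categories, thresholds))


settings = pair_safety_settings(
    ["HARM_CATEGORY_HATE_SPEECH", "HARM_CATEGORY_HARASSMENT", "HARM_CATEGORY_DANGEROUS_CONTENT"],
    ["BLOCK_LOW_AND_ABOVE", "BLOCK_ONLY_HIGH"],
)
print(settings)
# {'HARM_CATEGORY_HATE_SPEECH': 'BLOCK_LOW_AND_ABOVE', 'HARM_CATEGORY_HARASSMENT': 'BLOCK_ONLY_HIGH'}
```

In this example, the third category has no matching threshold, so it is dropped, mirroring the "extra items are ignored" rule.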

Translation

- Translate translates file content retrieved from a CMS or file storage. The output can be used in compatible Blackbird interoperable actions.

- Translate in background works like the previous action, except that it creates a long-running background process that does not block your parallel actions. Use it together with a checkpoint to download the result file of this background job.

- Translate text takes a text and a locale and attempts to create a localized version of the text.

Editing

- Edit edits a translation. This action assumes you have previously translated content in Blackbird through any translation action. It only looks at translated segments and changes their state to reviewed.

- Edit in background works like the previous action, except that it creates a long-running background process that does not block your parallel actions. Use it together with a checkpoint to download the result file of this background job.

- Edit Text takes a source segment and a translated target segment and returns an edited version of the target segment that takes typical mistakes into account.

Note: files are interoperable. Under the hood, Blackbird may convert to and from bilingual files with metadata, but all our actions accept many different file formats.

Reporting

- Create MQM report performs an LQA analysis of a translated XLIFF file. The result is a text report in the MQM framework format.

- Create MQM report in background works like the previous action, except that it creates a long-running background process that does not block your parallel actions. Use it together with a checkpoint to download the result of this background job.

Review

- Quality estimation (experimental) evaluates the translation quality of translated files at the unit and file level.

Background

- Download background file retrieves the output file of a translation or edit performed in the background.

- Get background result retrieves the output text of an MQM report generated in the background.

Events

Background

- On background job finished is triggered when a background job finishes. Use it after starting any background job, then use the applicable action to get the results.

Temperatures

The Google Gemini AI app lets you set the temperature for any action you perform in Blackbird. You can set a custom value between 0.0 and 1.0, or use one of the labels available in the Blackbird UI:

- 0.1 Governed: Maximum predictability.

- 0.3 Balanced: A controlled blend of accuracy and flexibility.

- 0.5 Expressive: Accuracy is preserved, structure is looser (default).

- 0.6 Exploratory: Encourages alternative phrasings and ideas while remaining context-aware.

- 0.8 Experimental: Prioritizes creativity over predictability.
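The labels above amount to a small lookup table. The sketch below shows how a label or a custom value could resolve to a temperature within the documented 0.0 to 1.0 range. The dictionary and the `resolve_temperature` function are hypothetical illustrations, not Blackbird's code.

```python
from typing import Optional, Union

# Preset labels and values as listed above (illustrative mapping, not Blackbird's code).
TEMPERATURE_PRESETS = {
    "Governed": 0.1,
    "Balanced": 0.3,
    "Expressive": 0.5,
    "Exploratory": 0.6,
    "Experimental": 0.8,
}
DEFAULT_LABEL = "Expressive"  # documented default


def resolve_temperature(value: Optional[Union[str, float]] = None) -> float:
    """Map a preset label or a custom number to a temperature between 0.0 and 1.0."""
    if value is None:
        return TEMPERATURE_PRESETS[DEFAULT_LABEL]
    if isinstance(value, str):
        if value not in TEMPERATURE_PRESETS:
            raise ValueError(f"unknown temperature label: {value!r}")
        return TEMPERATURE_PRESETS[value]
    temperature = float(value)
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")
    return temperature


print(resolve_temperature("Balanced"))  # 0.3
print(resolve_temperature())            # 0.5
```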

Important Notes on File Processing

Performance with Large Files: In our experience, Gemini models may struggle with files that contain more than 100 translation units. Results can be inconsistent: sometimes the model works well, but it often hallucinates (for example, returning only one translation unit when 50 were sent) or breaks formatting. For more reliable XLIFF processing, we recommend using background processing. Also, consider setting Bucket size to 15-20 or lower so the model can handle the workload effectively.

Notes

To use background actions and events, the service account needs the following permissions:

- aiplatform.batchPredictionJobs.create

- aiplatform.batchPredictionJobs.get

- storage.buckets.create
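If background jobs fail with permission errors, comparing the permissions your service account has against this list can narrow down the cause. The sketch below does only the comparison. How you collect the granted permissions (for example, from the IAM console or a custom role definition) is outside its scope, and the `missing_permissions` function is hypothetical.

```python
from typing import Iterable

# Permissions listed in this section for background actions and events.
REQUIRED_BACKGROUND_PERMISSIONS = frozenset({
    "aiplatform.batchPredictionJobs.create",
    "aiplatform.batchPredictionJobs.get",
    "storage.buckets.create",
})


def missing_permissions(granted: Iterable[str]) -> list[str]:
    """Return the required background permissions absent from `granted`, sorted."""
    return sorted(REQUIRED_BACKGROUND_PERMISSIONS - set(granted))


granted = {"aiplatform.batchPredictionJobs.create", "aiplatform.batchPredictionJobs.get"}
print(missing_permissions(granted))  # ['storage.buckets.create']
```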

Bucket size, performance and cost

Files can contain many segments. Each action takes your segments and sends them to the AI model for processing. The number of segments may be so high that the prompt exceeds the model’s context window, or that the model takes longer than Blackbird actions are allowed to run. This is why we introduced the Bucket size parameter, which determines how many segments are sent to the AI model at once. Tweaking it lets you split the workload across several API calls. The trade-off is that the same context prompt must be sent with each request, which increases the number of tokens used. In our experiments, a bucket size of 1500 was sufficient for models like gpt-4o, which is why 1500 is the default. Other models may require different bucket sizes; for Gemini, see the recommendation under Important Notes on File Processing.
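The trade-off between bucket size and token usage is simple arithmetic. The sketch below splits segments into buckets and estimates total input tokens, assuming every call repeats the same context prompt. It models only that arithmetic, not Blackbird's actual bucketing code. The per-segment and context token counts are made-up illustrative numbers, and the function names are hypothetical.

```python
from typing import Sequence, TypeVar

T = TypeVar("T")


def split_into_buckets(segments: Sequence[T], bucket_size: int) -> list[list[T]]:
    """Split segments into consecutive buckets of at most `bucket_size` items."""
    if bucket_size < 1:
        raise ValueError("bucket_size must be at least 1")
    return [list(segments[i:i + bucket_size]) for i in range(0, len(segments), bucket_size)]


def estimate_prompt_tokens(segment_tokens: Sequence[int], bucket_size: int,
                           context_tokens: int) -> tuple[int, int]:
    """Return (number of API calls, total input tokens).

    Each call carries the full context prompt plus its bucket's segments, so
    smaller buckets mean more calls and more repeated context tokens.
    """
    calls = len(split_into_buckets(segment_tokens, bucket_size))
    total = calls * context_tokens + sum(segment_tokens)
    return calls, total


# 100 segments of ~30 tokens each with a ~500-token context prompt (illustrative numbers).
segments = [30] * 100
for size in (20, 1500):
    print(size, estimate_prompt_tokens(segments, size, 500))
# 20 (5, 5500)
# 1500 (1, 3500)
```

A bucket size of 20 costs 2,000 extra context tokens here but keeps each request small, which is the trade-off recommended for Gemini above. Comparing the number of units returned for each bucket against the number sent is one simple way to catch the dropped-unit failure described under Important Notes on File Processing.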

Feedback

Do you want to use this app or do you have feedback on our implementation? Reach out to us using the established channels or create an issue.